What needs to be done to move A.I. to the next level? Is it new innovations in A.I. algorithms? Is it greater access to datasets? Is it the reliability of A.I. systems? Is it better interpretability of what the A.I. is doing? Is it higher interoperability? Is it more information on the predictability of the A.I.? Is it a greater level of trustworthiness?

I argue it is all of these things and more. The evolution, and I dare say revolution, of the internet was brought on by the need for disparate networks and systems to interact and provide a reliable level of service to their users. A system that users could trust would allow them to work and communicate more effectively. The real accelerator was the creation of the web: once reliable, user-friendly applications were developed to harness the web's potential, the internet exploded. [How the web went world wide] [Internet history has just begun]

We are now at that point with A.I. systems. We have separate systems and datasets across a multitude of hosting platforms, numerous implementations, and various APIs. These systems are developed and cultivated by individuals and organizations based on their own defined use cases. Many of these use cases overlap, so opportunities exist for knowledge sharing, resource sharing, and cost savings. Unfortunately, many organizations implement their own versions of the same ML or A.I. systems, incurring internal research and IT implementation costs. Several factors drive a costly in-house implementation over the use of a publicly available A.I. Some of the most apparent are sensitivity of the data, reliability of the A.I., security (e.g., has a malicious actor poisoned the A.I.?), configurability and interoperability (the A.I. system is not adaptable to the needs of the organization's system), and interpretability (understanding how the A.I. works in order to explain to stakeholders how it made a decision).

We also have many systems and datasets today that would benefit from A.I. Legacy systems, in particular, would see almost instant benefit from even some of the most basic ML libraries available today.

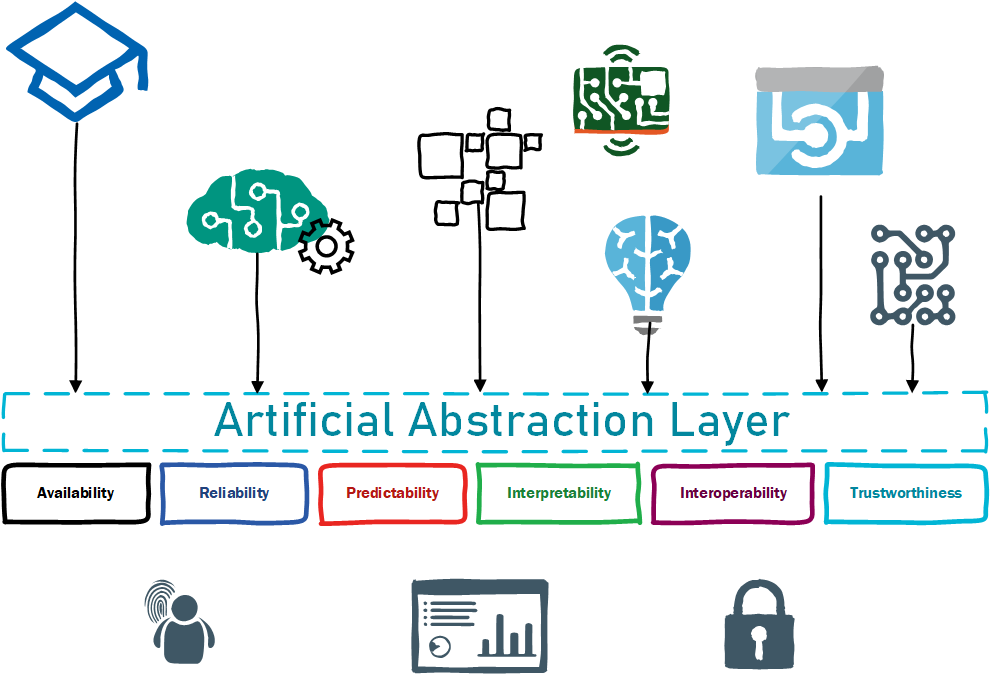

We need an Artificial Abstraction Layer (A.A.L): a system that would allow access to, and sharing of, A.I. resources. Algorithm compute services, datasets, and pre-trained A.I. services would be accessible through a secure, standards-based approach that provides availability, reliability, predictability, interpretability, interoperability, and trustworthiness. Such a platform could be the springboard for revolutionary new steps in A.I., allowing for growth and adoption not just in research but in practical applications.
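To make the idea a little more concrete, here is a minimal sketch of what such a layer could look like in Python. It is only an illustration under stated assumptions: the names `Resource`, `AbstractionLayer`, `trust_score`, and `min_trust` are hypothetical, the trust score is a placeholder number rather than a real trust model, and nothing here describes an existing product or standard.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class Resource:
    """A shared A.I. resource (hypothetical descriptor, for illustration only)."""
    name: str
    kind: str                      # e.g. "dataset", "compute", "pretrained-service"
    trust_score: float             # assumed scale: 0.0 (untrusted) to 1.0 (trusted)
    handler: Callable[[Any], Any]  # the call that actually serves a request


@dataclass
class AbstractionLayer:
    """A single entry point that registers, lists, and invokes resources."""
    min_trust: float = 0.5
    registry: Dict[str, Resource] = field(default_factory=dict)

    def register(self, resource: Resource) -> None:
        self.registry[resource.name] = resource

    def discover(self, kind: str) -> List[str]:
        # Only resources that meet the trust threshold are visible to callers.
        return sorted(
            name
            for name, res in self.registry.items()
            if res.kind == kind and res.trust_score >= self.min_trust
        )

    def invoke(self, name: str, payload: Any) -> Any:
        resource = self.registry[name]
        if resource.trust_score < self.min_trust:
            raise PermissionError(f"{name} is below the trust threshold")
        return resource.handler(payload)


# Example usage with two toy services (both invented for this sketch).
layer = AbstractionLayer(min_trust=0.7)
layer.register(Resource(
    "sentiment-v1", "pretrained-service", 0.9,
    lambda text: "positive" if "good" in text else "negative",
))
layer.register(Resource(
    "unvetted-model", "pretrained-service", 0.2,
    lambda text: "unknown",
))

print(layer.discover("pretrained-service"))
print(layer.invoke("sentiment-v1", "good service"))
```

The point of the sketch is the shape rather than the details: callers talk to one interface, and the layer decides which resources are visible and callable based on how much they are trusted.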

At Lavaca Scientific, we believe the first step in achieving such a system is establishing trust: understanding users and A.I. services and their level of trustworthiness. We are working to develop a distributed identity model that uses A.I. to learn and develop policy that governs and guides how A.I. systems and users interact.